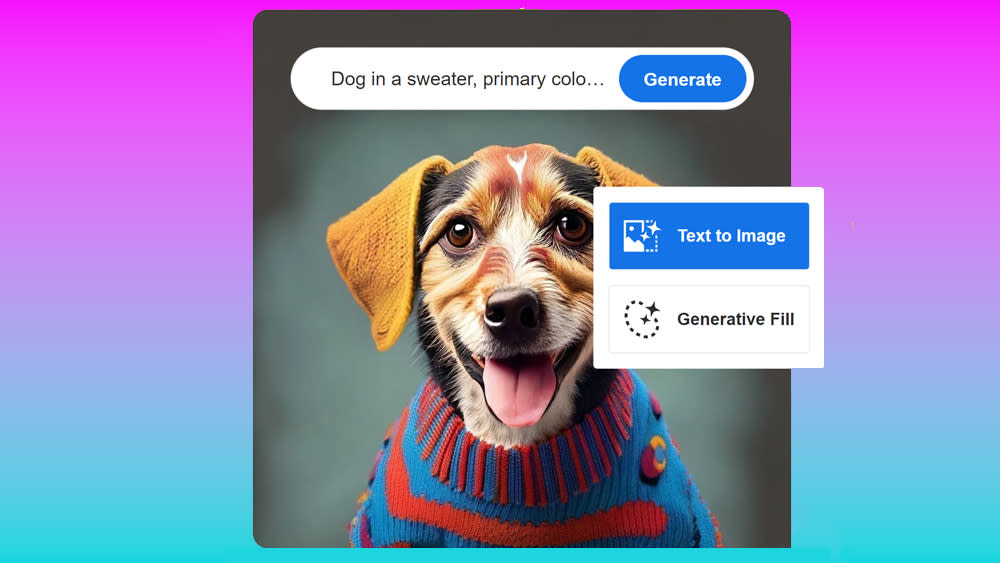

So Adobe Firefly AI isn't as squeaky clean as it seemed

Adobe's Firefly AI has two big selling points over the rest of the best AI art generators. First, it's practical for creative workflows, powering tools integrated into existing software like Photoshop and Illustrator. Second, it's been billed as commercially safe. But it turns out that it has a few skeletons in its closet... or rather, in its training data.

Adobe has made much of the point that Firefly was trained on public domain material and images from Adobe Stock, its own library of licensed assets. In theory, that makes it more ethical than the AI image generators that were trained by scraping the entire web, sucking up artists' and photographers' work without permission. So reports that Firefly was also trained on Midjourney images are a little embarrassing for the software giant.

An article by Rachel Metz for Bloomberg has revealed that Firefly's training data included AI-generated images, including images made with models such as Midjourney. The report doesn't suggest that Adobe scraped these images; it accepts Adobe's claim that it used Adobe Stock as the source of its training data. The catch is that Adobe Stock itself contains AI-generated images submitted by contributors.

Adobe believes around 5% of the images used to train Firefly were generated by AI. It stresses that all of the images accepted for inclusion in Adobe Stock "including a very small subset of images generated with AI" go through a "rigorous moderation process to ensure it does not include IP, trademarks, recognizable characters or logos, or reference artists’ names." However, several artists have questioned this after finding AI-generated images on the platform appearing in searches for their own names.

Perhaps the news shouldn't surprise us. Adobe began accepting AI-generated content on Adobe Stock back in 2022, and there are now over 50 million images tagged as AI-generated. But Adobe could have excluded these had it wanted to. After all, they're tagged as AI-generated.

According to anonymous sources at Adobe cited by Bloomberg, there were internal disagreements over this, with some staff questioning the ethics of using the AI-generated imagery. The article also cites a Discord message from Adobe Stock 'Contributor Evangelist' Mat Hayward arguing that AI-generated imagery "enhances our dataset training model". Hayward also noted that Adobe Stock contributors who submitted AI-generated imagery would qualify for Adobe's 'Firefly bonus', which the company paid to contributors whose content was used to train the first public version of the AI model.

Is Firefly commercially safe?

So is Firefly AI commercially safe to use? Adobe says it is, and it's so convinced of this that its Enterprise plan offers indemnification in the event that users get sued over content Firefly produces. The new revelation probably doesn't change anything in this respect, since Firefly's own output is twice removed from the original copyrighted material.

However, it isn't exactly great for Adobe's claim that Firefly is different. The tech giant may not have trained its AI image generator on unlicensed images, but it trained it on images produced by generators that were. "Synthetic laundering," some people are calling it... or "AI incest".

For Metz, the most controversial aspect is that it contradicts the way Adobe has presented Firefly. "This is not about data contamination— it’s about the company making a deliberate choice to include AI-generated images from Adobe Stock in its dataset while publicly positioning itself as very different from the companies whose tools were used to make those AI-generated images," she wrote in a response on X.

The reality is that, if you're going to use AI image generation, Adobe Firefly remains one of the most ethically sound options available, if such a thing exists. It's also the most useful. As well as vouching for where its training material came from, Adobe has been active in developing tools to help track the provenance of AI-generated images, adding Content Credentials to Adobe Firefly content so that it can more easily be identified as AI-generated.

Other options with ethical claims include Generative AI by Getty Images, which was built with Nvidia and is trained exclusively on licensed content from the Getty Images creative library. Contributors whose content is in the training set also receive recurring payments.

As for Adobe, the company is now working on an AI video generator, and it seems to be following the same approach of using licensed contributions. It's even paying artists to create material specifically to train the model, although the rate isn't exactly generous: Bloomberg has reported that Adobe is offering around $3 per minute for footage to be used in perpetuity without credit (UPDATE: Adobe has just released a preview of new AI video editing tools for Premiere Pro).

In the meantime, Adobe continues to improve Firefly, adding tools like Structure Reference, and it's been introducing AI-powered tools in other programs, such as Adobe Substance 3D.

Leaders in media and entertainment appear to be largely optimistic about how AI will affect their sectors. Autodesk's 2024 State of Design and Make report finds that executives rank the "ability to work with AI" as the top-priority skill for future employees.