Humans Could Develop a Sixth Sense, Scientists Say

Scientists in Japan have shown that humans may be able to develop a sixth sense: echolocation.

Fifteen participants used tablets to generate bat-like sound pulses, then judged from the echoes whether a 3D cylinder was rotating or standing still.

The results show that humans are better at recognizing objects by their echoes when the objects are moving than when they are stationary.

Humans have some seriously limited senses. We can’t smell as well as dogs, see as many colors as mantis shrimp, or find our way home using the Earth’s magnetic poles like sea turtles. But there’s one animal sense that we could soon master: bat-like echolocation.

Scientists in Japan recently demonstrated this feat in the lab, showing that humans can use echolocation (the ability to locate objects through sound) to identify the shape and rotation of objects. That could help us stealthily “see” in the dark, whether we’re sneaking downstairs for a midnight snack or heading into combat.

As bats swoop around objects, they send out high-pitched sound waves from distinct angles that bounce back at different time intervals. This helps the tiny mammals learn more about the geometry, texture, or movement of an object.
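To make the timing idea concrete, here is a minimal sketch of how echo delay relates to distance. It assumes sound travels at roughly 343 m/s in room-temperature air; the function name and the example distances are illustrative and do not come from the study.

```python
# Approximate speed of sound in air at about 20 degrees Celsius (assumed value).
SPEED_OF_SOUND_M_PER_S = 343.0


def echo_delay_seconds(distance_m: float) -> float:
    """Round-trip time for a sound pulse to reach a surface and bounce back."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    # The pulse covers the distance twice: out to the surface and back again.
    return 2.0 * distance_m / SPEED_OF_SOUND_M_PER_S


# Two hypothetical surfaces on the same object, 10 cm apart in depth.
near_delay = echo_delay_seconds(1.0)
far_delay = echo_delay_seconds(1.1)

# The difference between the two return times is what carries shape information.
delay_gap_ms = (far_delay - near_delay) * 1000.0
print(f"Echo delay gap: {delay_gap_ms:.3f} ms")
```

The gap comes out to a fraction of a millisecond, which gives a sense of how fine the timing differences in an echo can be.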

If humans could similarly recognize these time-varying acoustic patterns, it could quite literally expand how we see the world, says Miwa Sumiya, Ph.D., the first author of the new study, which appears in PLOS ONE.

“Examining how humans can acquire new sensing abilities to recognize environments using sounds [i.e., echolocation] may lead to the understanding of the flexibility of human brains,” Sumiya, a researcher at the Center for Information and Neural Networks in Osaka, Japan, tells Pop Mech. “We may also be able to gain insights into sensing strategies of other species [like bats] by comparing with knowledge gained in studies on human echolocation.”

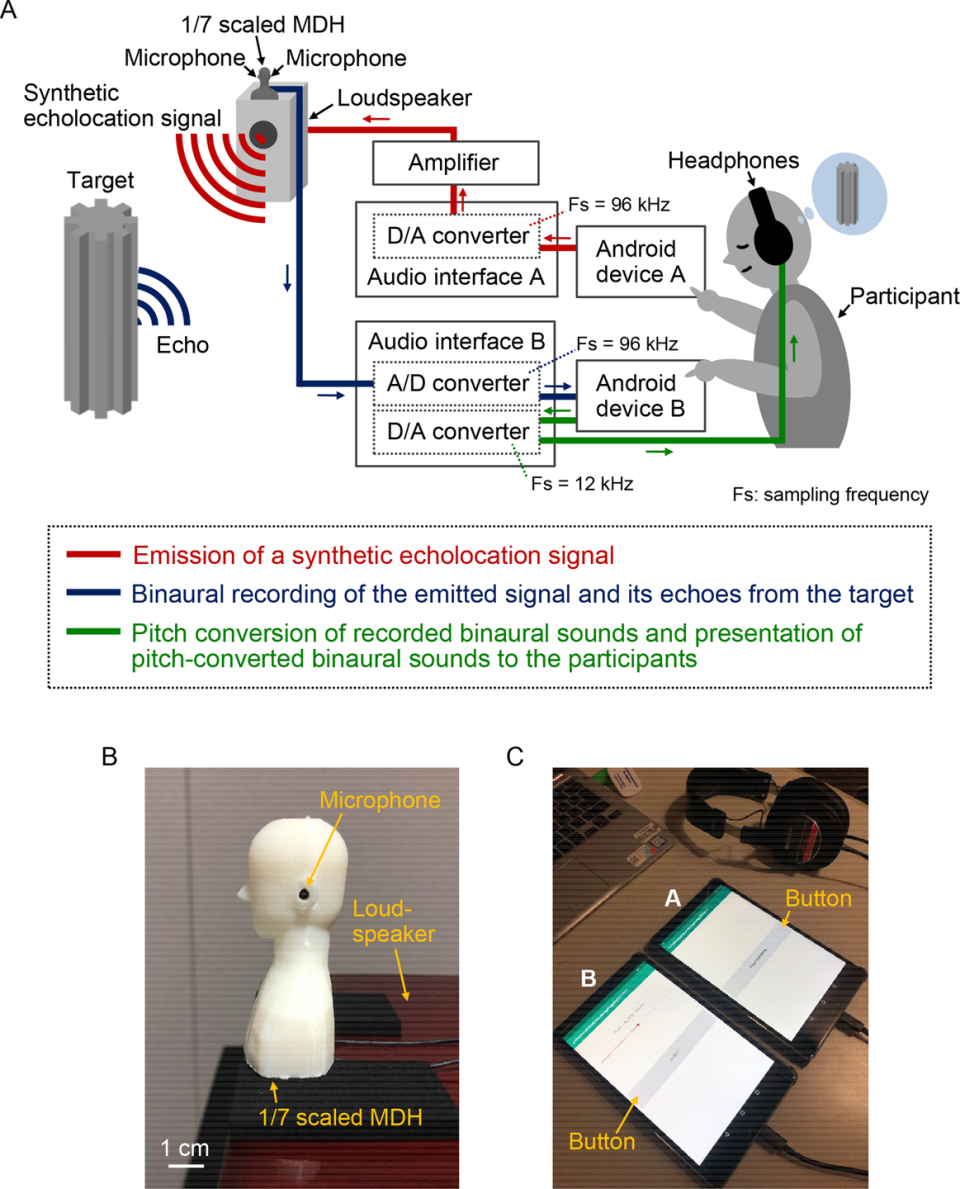

To test this theory out, Sumiya’s team created an elaborate setup. In one room, the researchers gave participants a pair of headphones and two different tablets—one to generate their synthetic echolocation signal, and the other to listen to the recorded echoes. In a second room (not visible to participants), two oddly shaped, 3D cylinders would either rotate or stand still.

When prompted, the 15 participants initiated their echolocation signals through the tablet. The sound waves were released in pulses, traveled into the second room, and hit the 3D cylinders.

It took a bit of creativity to transform the sound waves back into something the human participants could recognize. “The synthetic echolocation signal used in this study included high-frequency signals up to 41 kHz that humans cannot listen to,” Sumiya explains.

The researchers employed a miniature dummy head (about one-seventh the size of your actual cranium) to “listen” to the sounds in the second room before transmitting them back to the human participants. Yes, really.

The dummy head featured two microphones, one in each ear. That, in turn, rendered the echoes binaural: recorded separately for each ear, so they carry the spatial cues that make a sound seem three-dimensional. It’s a bit like the surround sound you might experience at a movie theater. Lowering the frequency of these binaural echoes meant the human participants could hear them “with the sensation of listening to real spatial sounds in a 3D space.”
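The article does not say how, or by how much, the echoes were lowered. As a rough illustration only, slowing a recording down by some factor divides every frequency in it by that factor and stretches its duration by the same amount. The factor of 8 and the 5 ms pulse length below are assumptions chosen for the example, not figures from the study; the 41 kHz ceiling is the one Sumiya cites.

```python
# Commonly cited upper limit of human hearing, in hertz.
HUMAN_HEARING_LIMIT_HZ = 20_000.0


def lowered_frequency_hz(original_hz: float, slowdown_factor: float) -> float:
    """Frequency heard when a recording is played back slowdown_factor times slower."""
    if slowdown_factor <= 0:
        raise ValueError("slowdown_factor must be positive")
    return original_hz / slowdown_factor


def stretched_duration_s(original_s: float, slowdown_factor: float) -> float:
    """Slowed playback also makes the sound last slowdown_factor times longer."""
    if slowdown_factor <= 0:
        raise ValueError("slowdown_factor must be positive")
    return original_s * slowdown_factor


ILLUSTRATIVE_FACTOR = 8.0  # assumption for this sketch, not taken from the study
SIGNAL_TOP_HZ = 41_000.0   # upper edge of the synthetic signal, per the article

audible_top_hz = lowered_frequency_hz(SIGNAL_TOP_HZ, ILLUSTRATIVE_FACTOR)
pulse_length_s = stretched_duration_s(0.005, ILLUSTRATIVE_FACTOR)  # hypothetical 5 ms pulse

print(f"Top frequency after slowdown: {audible_top_hz:.0f} Hz")
print(f"Within hearing range: {audible_top_hz < HUMAN_HEARING_LIMIT_HZ}")
print(f"Pulse length after slowdown: {pulse_length_s * 1000:.0f} ms")
```

With any factor larger than about 2, the 41 kHz upper edge of the signal would drop below the usual 20 kHz hearing limit.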

That makes sense; there's a whole cult-like following around podcasts and videos that use binaural microphones to produce surround sound and a tingly feeling in the ears, known as the autonomous sensory meridian response, or ASMR.

Finally, the researchers asked participants to determine whether the echoes they heard were from a rotating or a stationary object. In the end, participants could reliably tell the two cylinders apart from the time-varying echoes bouncing off the rotating cylinders, using cues like timbre or pitch. They were less adept at identifying the shapes from the echoes of stationary cylinders.

This study isn’t the first to demonstrate echolocation in humans—previous work has shown that blind people can use mouth-clicking sounds to see 2D objects. But Sumiya says the study is the first to explore time-varying echolocation in particular.

The researchers say their work is evidence that both humans and bats are capable of interpreting objects through sound. In the future, engineers could seamlessly incorporate this tech into wearables like watches or glasses to soundlessly improve how those with visual impairments are able to navigate the world—minus the miniature head.
