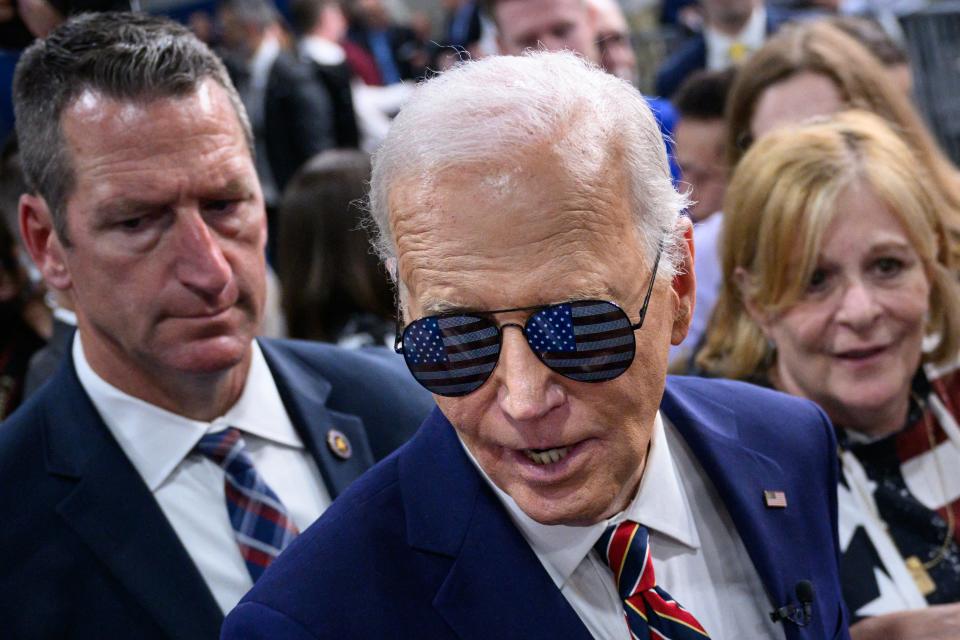

Political consultant charged with making Joe Biden robocalls in New Hampshire primary

WASHINGTON - A Louisiana political consultant has been indicted for allegedly using an artificial-intelligence impersonation of President Joe Biden to urge Democrats not to vote in New Hampshire’s presidential primary last January, a top state official announced Thursday.

The Democratic consultant, Steven Kramer, was indicted by grand juries in the four New Hampshire counties where potential voters received the robocalls, after he admitted to orchestrating them.

New Hampshire Attorney General John Formella said Kramer, 54, of New Orleans, LA, was indicted on charges of felony voter suppression and misdemeanor impersonation of a candidate.

Kramer faces a total of 26 criminal counts, half of them for alleged voter suppression, a felony charge, and the other 13 for impersonating a candidate, which is a misdemeanor under New Hampshire law.

Formella said the charges in the four separate counties were the result of investigations into robocalls received by potential voters living there. The indictments were handed up over the past month, he said.

The Federal Communications Commission on Thursday announced it was proposing a $6 million fine against Kramer for allegedly trying to defraud voters through the robocall scheme in violation of the federal Truth in Caller ID Act. The federal agency also said it was proposing a $2 million fine against Lingo Telecom for allegedly routing the calls without taking required steps to authenticate them. It said both will be given an opportunity to respond before the FCC acts further to enforce the penalties.

“I hope that our respective enforcement actions send a strong deterrent signal to anyone who might consider interfering with elections,” Formella said, “whether through the use of artificial intelligence or otherwise.”

Formella said the investigation “into the AI-Generated President Biden Robocalls, including other potentially responsible parties,” remains active and ongoing. The FCC said in announcing the proposed fines that it “continues its work in understanding and adjusting to the impacts of AI on robocalling and robotexting.”

Kramer could not be reached for comment. Lingo Telecom said in a statement to USA TODAY that it "strongly disagrees with the agency’s decision" to issue the proposed penalty.

"Lingo Telecom takes its regulatory obligations extremely seriously and has fully cooperated with federal and state agencies to assist with identifying the parties responsible for originating the New Hampshire robocall campaign," it said, adding that it was "not involved whatsoever in the production of these calls" and complied with all applicable federal regulations and industry standards.

What launched the investigation?

Deepfakes, or videos or images that have been digitally created or changed with artificial intelligence or other technology, have been a concern of U.S. election officials for years.

Formella’s office announced Jan. 22 that it was opening an investigation into reports of thousands of New Hampshire residents receiving a robocall message asking them to “save [their] vote for the November election” and stating “[y]our vote makes a difference in November, not this Tuesday.”

The voice in the recorded message appeared to have been artificially generated to sound like the voice of Biden, Formella said in a news release Thursday announcing the charges.

“The message additionally appeared to have been ‘spoofed’ to falsely show that it had been sent by the treasurer of a political committee that had been supporting the New Hampshire Democratic Presidential Primary write-in efforts for President Biden,” Formella said.

Under New Hampshire law, it is illegal to knowingly attempt to prevent or deter another person from voting or registering to vote “based on fraudulent, deceptive, misleading, or spurious grounds or information.”

Kramer allegedly violated that felony statute by sending or causing to be sent a pre-recorded phone message that disguised the source of the call, deceptively using an artificially created voice of a candidate or providing misleading information in an attempt to deter 13 identified voters from voting in the state's Jan. 23 presidential primary, Formella said.

The misdemeanor charge alleges that when Kramer placed a call to each of the prospective voters, he “falsely represented” himself as a candidate for political office.

Who got the calls?

Multiple voters received the artificially generated message on the Sunday night just before the primary, telling them: “Your vote makes a difference in November, not this Tuesday.”

The call also included the personal phone number of Kathy Sullivan, a key leader in the effort encouraging Democrats to write in Biden’s name on the ballot.

“We know there are anti-democratic forces out there who are terrified by the energy of this grassroots movement to stop Donald Trump, but New Hampshire voters will not stand for any efforts to undermine our right to vote,” Sullivan said in a statement about the deepfake at the time. In a phone call with USA TODAY in January, Sullivan said she felt “disgust” and “disbelief” over the fake robocall and her personal information being leaked.

"Anyone who did this, if they think they're a patriot, you're not," Sullivan said. "You're not a good American, because you're trying to interfere with the fundamental processes of what makes our country different and better."

On Thursday, Sullivan thanked the New Hampshire Department of Justice for its “fast work” on the case. “Hopefully this indictment deters others,” she said in a post on X, formerly Twitter. “Hopefully I never ever have to deal w/ politics & criminal use of phones again.”

The indictments were first reported by NBC News. In a February interview with NBC, Kramer admitted to orchestrating the robocalls as an act of civil disobedience to call attention to the dangers of AI in politics.

Kramer told NBC the scheme was his own idea and had nothing to do with his client, Biden's long-shot primary challenger, Rep. Dean Phillips, D-Minn.

In February, the New York Times reported that Kramer said he had hired technology and marketing consultant Paul Carpenter to produce the audio for the calls using an AI tool, and that Carpenter said he was not aware of how the audio would be used.

How big of a problem are election-related robocalls?

Miles Taylor, a former senior Department of Homeland Security official, said he and other cybersecurity experts have been bracing for the malicious use of deepfakes in the 2024 presidential election.

“We’ve been working with US officials on the expected surge in deepfakes,” Taylor said in a post on X, formerly known as Twitter. “This is just the beginning.”

Last June, Florida Gov. Ron DeSantis’ campaign reportedly used images of Trump embracing Dr. Anthony Fauci in a campaign video that forensic experts said were almost certainly realistic-looking deepfakes generated by artificial intelligence, the USA TODAY Network reported at the time.

A month before that, Sen. Richard Blumenthal, D-Conn., launched a Senate Judiciary Committee hearing into the potential pitfalls of deepfakes by playing an AI-generated recording that mimicked his voice and read a ChatGPT-generated script.

“If you were listening from home, you might have thought that voice was mine and the words from me,” the real Blumenthal said in revealing the deepfake, warning that the technology could be game-changing in terms of “the proliferation of disinformation, and the deepening of societal inequalities.”

One former Department of Homeland Security cyber official warned that the fake Biden call in New Hampshire could become the new normal given the rapid advances in technology and the lack of comprehensive government and private sector oversight.

“Obviously, there are risks with AI, particularly in the political sphere where there is a winner-takes-all issue like an election,” said the former official, who spoke on the condition of anonymity because of their current role with a social media company involved in protecting against deepfakes.

“That’s where you are going to see the most targeted and arguably most insecure uses of AI because the incentives for the players are absolutely to sort of kill the other guy. So you see them innovating quickly and incorporating new technologies,” the former Homeland Security official said. “The problem is right now, we don't have all the answers about how to secure AI, or how to use AI for security. I think everyone is trying to get their legs on under them.”

Contributing: Savannah Kuchar

This article originally appeared on USA TODAY: Election robocalls imitating President Biden lead to criminal charges