AI-generated sexual images of Taylor Swift are 'alarming and terrible,' says Microsoft CEO Satya Nadella, but what can the tech giant do about it?

What you need to know

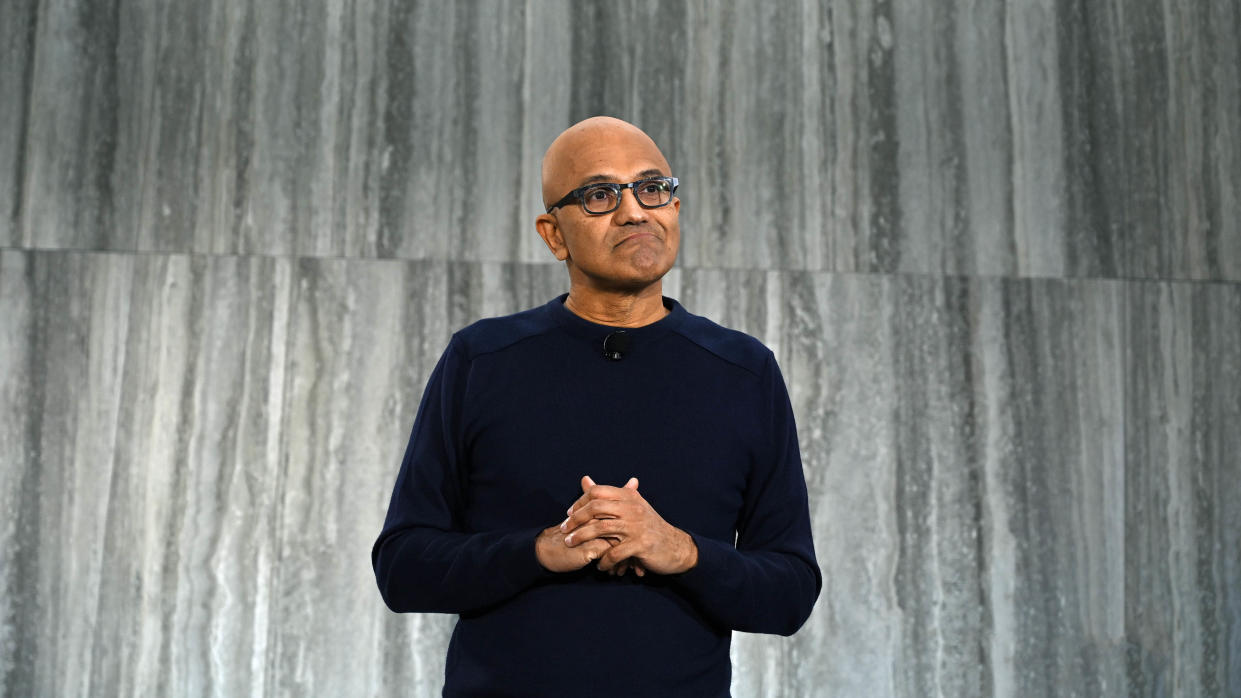

Microsoft CEO Satya Nadella spoke with NBC Nightly News about the emergence of AI-generated fake images that depict Taylor Swift in a sexual manner.

Nadella called the situation "alarming and terrible" and said Microsoft and others need to act.

A report claims that Microsoft Designer was used to generate the images of Taylor Swift, though that claim has not been confirmed.

AI-generated images of Taylor Swift emerged online recently. Because the fake photographs were sexually explicit, they sparked debate about using artificial intelligence to create images of people without their consent, and the explicit nature of the content made that discussion more complex.

In an interview with NBC Nightly News, Microsoft CEO Satya Nadella discussed the situation and talked about what can be done to guide AI and guard against misuse.

Nadella said the following in his interview, which will air in its entirety on January 30, 2024:

"I would say two things. One, is, again, I go back to, I think, what's our responsibility, which is all of the guardrails that we need to place around the technology so that there’s more safe content that’s being produced. And there’s a lot to be done and a lot being done there. And we can do, especially when you have law and law enforcement and tech platforms that can come together, I think we can govern a lot more than we think- we give ourselves credit for."

When asked whether the fake Taylor Swift images "set alarm bells off" about what can be done, Nadella said, "First of all, absolutely this is alarming and terrible. And therefore, yes, we have to act."

Microsoft tools creating pornography

Microsoft is one of the largest names in AI, and it turns out that the tech giant's tools may be connected to the fake Taylor Swift images. A 404 Media report states that members of a "Telegram group dedicated to abusive images of women" used Microsoft's AI-powered image creation tool to make the fake photographs of Swift.

Microsoft recently renamed Bing Image Creator to Image Creator from Designer. The 404 Media report did not specify which tool the members of the Telegram group used, but there's a good chance it was Image Creator from Designer (formerly Bing Image Creator).

That tool has guardrails in place to prevent the creation of pornographic images, but there are ways to trick AI tools into creating content that's supposed to be banned.

What can Microsoft do about AI-generated porn?


Many will focus on whether the government should regulate AI-generated pornography. Microsoft's role and responsibility will also likely be a hot topic of discussion. But I want to focus on what Microsoft can do and what is already being done about AI-generated images.

As Nadella alluded to, Microsoft can put guardrails in place that limit or restrict the types of content Copilot and other AI tools can create. These guardrails already exist, but they can clearly be worked around. People bypass AI limits for everything from harmless fun to generating revenge porn. Microsoft can add more guardrails and tighten restrictions, but there's a good chance that people will always find ways to use Microsoft's AI tools to make content the tech giant wouldn't approve of.

There's a larger moral discussion surrounding who gets to decide what's appropriate, but I'll leave that for experts to talk about.

Even if Microsoft found a way to stop its tools from generating pornography or other controversial images, there are other AI tools available. Web-based AI tools such as Microsoft's Copilot and Google's Bard are easy to use, but they're far from the only artificial intelligence tools around.

So, what can Microsoft do to help users differentiate real images from fake photos? The tech giant can help develop technology that identifies AI-generated images. OpenAI is working on a tool that it claims can identify AI-generated images with 99% reliability.

Some social media sites already use similar tools to scan for sensitive images, which are then flagged. Of course, those tools aren't perfect either, as X (formerly Twitter) struggled to stop the fake images of Taylor Swift from spreading.
