After a deluge of AI-powered products, CES 2024 is making it tougher than ever to define what AI actually is

Jacob Ridley, senior hardware editor

This week I've been at CES 2024. Stomping around Vegas has left my feet sore, but it was worth it to get a first look at so many new products. This year there were an overwhelming number of products brandishing AI abilities, and I'm left wondering what to make of it all.

Another CES, another buzzword. In previous years it's been blockchain, metaverse, and putting the word 'smart' in front of everything, but you don't win anything for guessing what it is for 2024. Artificial intelligence is the talk of the town and there was almost no escaping it on my travels around the Las Vegas tech show.

It's easy to understand why AI is taking the world by storm. The rapid success of ChatGPT and AI image generators has the whole world watching. Now most major tech firms are thinking about how they can integrate AI into their products, like Microsoft with Copilot for Windows or Intel with its latest Meteor Lake Core Ultra processors.

There's money to be made in AI. Nvidia is a trillion-dollar company thanks to its GPUs' ability to supercharge AI workloads. OpenAI, the creator of ChatGPT, has received billions in funding. These companies are bringing something genuinely new to the table, but not all AI features are so clearly new.

Some of what I've seen advertised as AI over at CES 2024 looks like it could've been called a smart feature before the AI hype spiked, including sleep monitoring, automated timers, head tracking, and automatic mode switching.

Many of these features existed before the AI boom. Is there something going on beneath the surface that makes any one of them more deserving of the AI tagline or is this tricksy marketing?

What distinguishes AI from other somewhat intelligent features is not altogether clear in many instances.

For some AI products, you'll find explicit references to training and learning, often with integration into a cloud-based system that takes on the brunt of the actual AI processing. Adobe, for example, offers AI algorithms that can do things its regular smart algorithms cannot, such as generative image creation. Admittedly, I still think the standard magic eraser tool is often more effective than the newer AI background removal, but I'm sure that'll change with time, because the AI features can be improved by deploying new models trained on new samples and feedback. It's this sort of training and feedback that begins to separate AI from traditional code made up of if statements and logic loops.
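To make that distinction a little more concrete, here's a toy sketch in Python. It's entirely hypothetical and has nothing to do with how Adobe's tools actually work: a hand-written rule with a fixed threshold sits alongside a tiny "learned" rule (a one-threshold classifier, sometimes called a decision stump) whose threshold is fitted from labelled examples. The function names and the brightness-based "is this background?" task are assumptions made purely for illustration.

```python
# Hypothetical example: deciding whether a pixel is "background" by brightness.

def rule_based_is_background(brightness: float) -> bool:
    # Traditional code: a programmer picked 0.8 and it never changes.
    return brightness > 0.8


def learn_threshold(samples: list[tuple[float, bool]]) -> float:
    """Fit a threshold from (brightness, is_background) feedback.

    Tries each observed brightness as a candidate threshold and keeps the
    one that misclassifies the fewest samples (ties keep the lowest value).
    """
    candidates = sorted({b for b, _ in samples})
    best_t, best_errors = candidates[0], len(samples) + 1
    for t in candidates:
        # Count samples where "brightness > t" disagrees with the label.
        errors = sum((b > t) != label for b, label in samples)
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t


feedback = [(0.2, False), (0.4, False), (0.6, True), (0.9, True)]
print(learn_threshold(feedback))                   # 0.4
print(learn_threshold(feedback + [(0.5, False)]))  # 0.5 after new feedback
```

The point isn't the maths, which is about as simple as machine learning gets. It's that the learned version changes its behaviour when it's given new samples, while the rule-based version only changes if someone rewrites it.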

The MSI monitor pictured above will also come with an app capable of training the software to work with games other than League of Legends.

In other cases, I struggle to define what it is about 2024's AI products that supposedly differs from pre-existing smart features. The exact definition of artificial intelligence varies, especially as new models and systems keep arriving. There are, however, a few key terms worth noting.

The first is artificial general intelligence, or AGI. This is defined as a system that is generally as smart as, or smarter than, a human: it can match or beat human results across a wide range of tasks. That differs from how a bot designed to play Go might surpass a human today, as that bot is highly specialised. Many consider the hallmark of a true AI to be its ability to outthink a human in many ways, through self-learning and decision making.

We're nowhere close to general intelligence yet, though you'll often hear companies like OpenAI talk about trying to reach it. Once a system can claim to be as intelligent as a human, it's likely that this form of AI will become the only one considered worthy of the name.

That raises the interesting question of whether what we consider AI changes as AI gets smarter. I'd hold that's true to some extent. The various chatbots launched prior to ChatGPT already appear pretty formulaic by comparison, and I doubt that, if and when we reach general intelligence, we'll still class today's ChatGPT as artificial intelligence. Yet today it's probably the best we've got, at least among publicly available models.

Stanford University's Institute for Human-Centered Artificial Intelligence publishes a list of AI definitions that includes one from emeritus professor John McCarthy, who coined the term 'artificial intelligence' in 1955. McCarthy defines AI as "the science and engineering of making intelligent machines." By this definition, all manner of technologies from the past few decades could be said to have advanced AI. That's fair, as AI wouldn't be here without decades of computer science, but Stanford also appends its own definition to help clear things up.

"Much research has humans program machines to behave in a clever way, like playing chess, but, today, we emphasize machines that can learn, at least somewhat like human beings do."

What we're looking for is a machine that can match a human in some way and is able to learn.

The human part of that definition is not all that applicable to the AI PCs and appliances at CES 2024. These are working to a somewhat different definition, one that refers to learning and automation, or what many would describe as machine learning.

Most definitions put machine learning as a subset of AI, and though the two are closely related, they're crucially different. Machine learning is about analysing data or images, recognising patterns, and using them to improve upon itself. It's the sort of thing you'll see applied in AI noise cancellation software.

As Microsoft outlines, machine learning and AI go together to make a genuinely useful machine. This machine learning lays the foundation of understanding for a device, and the AI harnesses that to make decisions. You can see this sort of symbiotic relationship in computer vision, where machine learning is used to teach a car about dangers on the road, but the job of hitting the brakes before crashing into a cow—imitating a human reaction—comes down to AI.

The difficulty is in how these definitions are then applied to products and how we apply them historically.

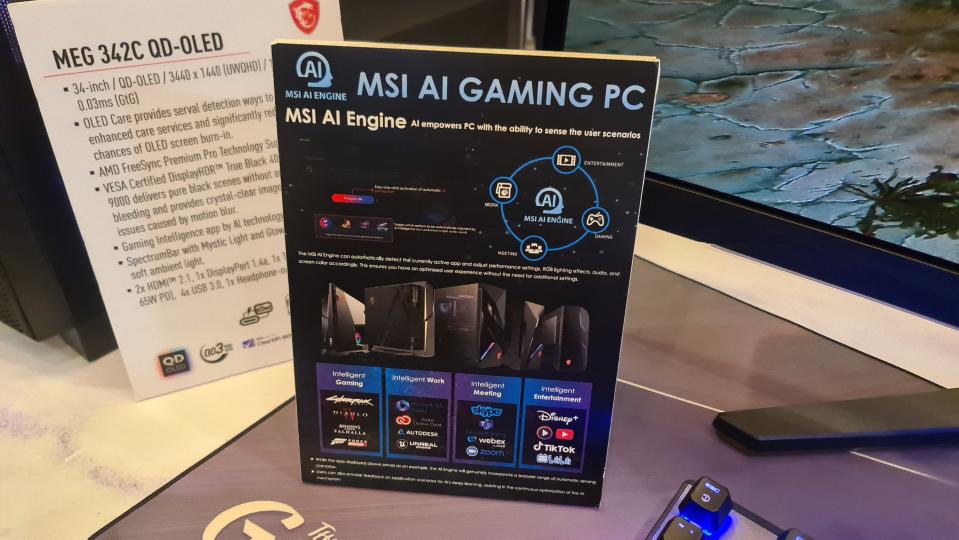

Take the MSI Prestige 16 AI Evo, for example. This is a laptop fitted with MSI's "AI Engine", which "detects user scenarios and automatically adjusts hardware settings to achieve the best performance." My question is: how is this AI, versus, say, Nvidia's GPU Boost algorithm? GPU Boost uses various sensors on a graphics card to automatically raise performance where thermal and power constraints allow, but that feature has been around since 2012 and no one calls it AI, for good reason.

There's definitely some overlap between intelligent features that react to human input and those that learn from it, and I'd be keen to know whether MSI's profile switching feature actually learns from a user's actions to improve over time, and whether that's what qualifies it as AI. That seems to be the best way I'm able to tell artificially intelligent features apart from plain ol' smart ones.
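Here's a rough sketch of what that difference could look like in practice. To be clear, this is not how MSI's AI Engine works (MSI hasn't said), and the scenario names, profiles and `AdaptiveProfileSwitcher` class are all hypothetical. The fixed mapping is the plain ol' smart version: it reacts to the detected scenario the same way every time. The adaptive switcher starts from that mapping but treats each manual override as feedback.

```python
from collections import Counter, defaultdict

# Hypothetical fixed mapping: reacts to a detected scenario, never learns.
DEFAULT_PROFILES = {"gaming": "performance", "video": "balanced", "office": "silent"}


class AdaptiveProfileSwitcher:
    """Hypothetical switcher that learns from a user's manual overrides."""

    def __init__(self) -> None:
        # For each scenario, count how often the user picked each profile.
        self.overrides: dict[str, Counter] = defaultdict(Counter)

    def choose(self, scenario: str) -> str:
        history = self.overrides[scenario]
        if history:
            # Prefer whichever profile the user has chosen most often here.
            return history.most_common(1)[0][0]
        # No feedback yet, so fall back to the fixed "smart" default.
        return DEFAULT_PROFILES.get(scenario, "balanced")

    def record_override(self, scenario: str, profile: str) -> None:
        # Each manual change becomes feedback for next time.
        self.overrides[scenario][profile] += 1


switcher = AdaptiveProfileSwitcher()
print(switcher.choose("video"))  # balanced (the default)
switcher.record_override("video", "silent")
switcher.record_override("video", "silent")
print(switcher.choose("video"))  # silent (learned from the user)
```

Counting overrides is a very crude form of learning, but it captures the distinction: the behaviour improves with use rather than staying fixed from the factory.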


Furthermore, AI isn't altogether a local technology. For the most part, these AI features are made possible by massive data centres, and you could say the AI revolution is riding the wave of the cloud computing one. We are seeing more AI processing power located locally, as in on your own PC (Nvidia GPUs come with heaps of AI processing cores, and Intel's latest Core Ultra chips feature an AI-accelerating NPU), but many of today's AI features still require an internet connection. That further muddies the water between what's actually an AI product and what's just tapping into an AI service elsewhere.

AI is difficult to define by its very nature, and its various subsets make sniffing out its true use cases even harder. The only practical conclusion I can come to is that stamping the catch-all term 'AI' onto a product may as well be meaningless, given the many and varied uses of what's classed as AI and machine learning today.

If you're looking for a new laptop, processor, toaster, or pillow, try to ignore the noise and look out for the genuinely effective features you'll actually use. The truly must-have AI and machine learning features should be pretty obvious.