Navigating AI Biases in The Classroom

Generative AI offers educators many advantages, both in and out of the classroom. Yet understanding the unintended biases that permeate such a massive ecosystem is critical to using it effectively.

Teachers and, yes, students will be using it, so the question is whether we want them to use it correctly, recognize bias, and navigate around it. The answer is indisputably yes.

Here are a few examples of these biases, why they persist, and how to navigate around them.

Navigating AI Biases in The Classroom: The Problem

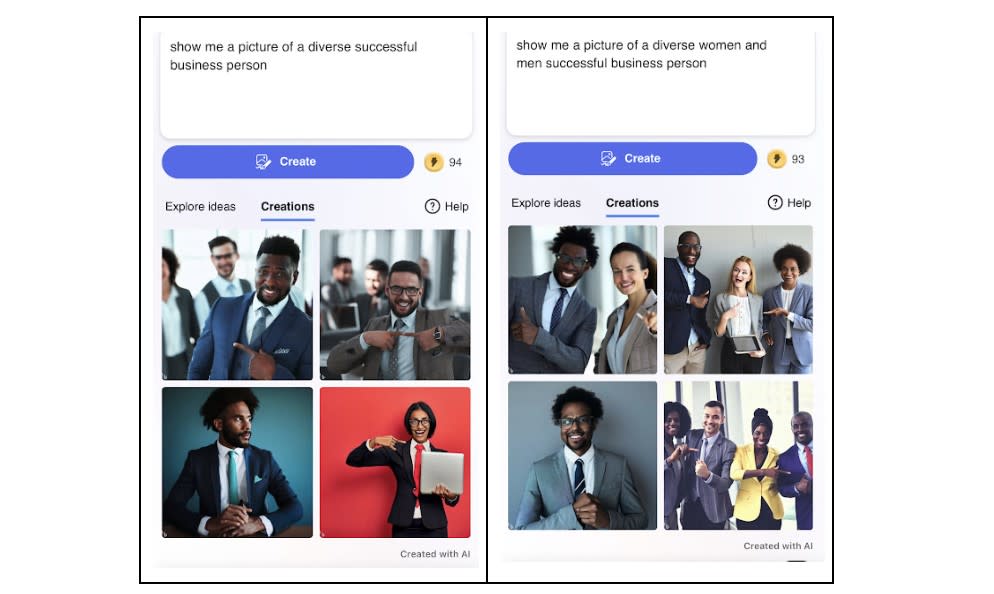

When I prompted an AI image generator for "a picture of a successful business person," three of the four results showed white men. Only one image showed a Black woman.

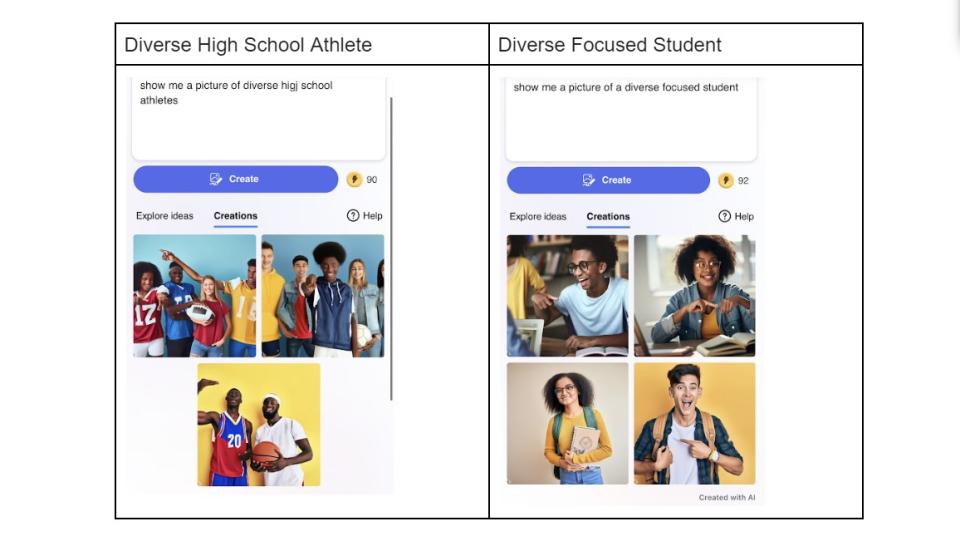

When I prompted for images of "a focused student," the first three results were boys (two white, one Asian), and the fourth appeared to be a high school girl.

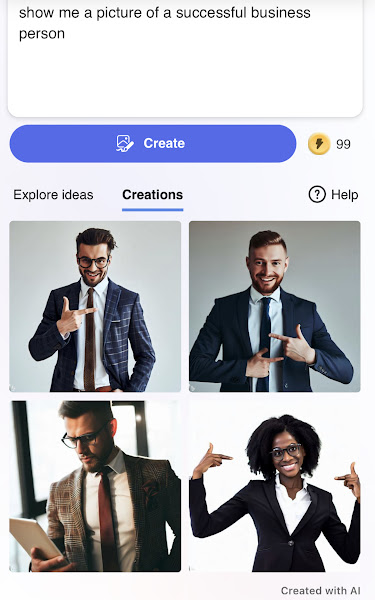

Next, I asked an AI image generator to create an image of a high school athlete. It produced three images, and the results appeared biased by both gender and race: all three showed male students, the first white and the other two Black and Brown.

Consider that these images were generated by AI's interpretation of my prompt.
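For a classroom exercise, the observations above can be tallied rather than eyeballed. The following is a minimal sketch, not a rigorous audit: the labels are my own perceived-gender readings of the images described in this article, and the names `observations` and `label_shares` are made up for illustration.

```python
from collections import Counter

# Hand-recorded, perceived-gender labels for the images described above.
# These are subjective observations from a handful of images, not ground truth.
observations = {
    "a picture of a successful business person": ["male", "male", "male", "female"],
    "a focused student": ["male", "male", "male", "female"],
    "a high school athlete": ["male", "male", "male"],
}

def label_shares(labels):
    """Return each label's share of the images as a fraction between 0 and 1."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}

shares = {prompt: label_shares(labels) for prompt, labels in observations.items()}

for prompt, share in shares.items():
    print(f"{prompt!r}: {share}")
```

Students can swap in their own prompts and labels, then discuss how much a sample of three or four images can and cannot tell us.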

If my anecdotal examples haven't convinced you, try it for yourself, or refer to a respected resource on this subject that describes how bias is becoming a more obvious concern as AI tools produce these realistic images.

Fortunately, there are tools that bring more transparency to AI systems and help demonstrate the need for navigating around implicit bias. For example, the Diffusion Bias Explorer compares images generated for a profession while the Average Diffusion Faces Tool showcases a common representation of faces across professions, based on AI-generated images.

These tools reveal the same concerning patterns I saw in my own searches. That makes for a compelling lesson in awareness of generative bias, for both teachers and students.

AI image generators, clever as they are, clearly have limitations. Bias in AI-generated content is not limited to images; biased results surface in responses to written prompts too. The companies behind generative tools are working to resolve this, but AI remains a remarkably imperfect system.

Why? The answer is not that AI generators are innately biased. That would suggest they have some kind of sentient consciousness (sorry, conspiracy theorists). In fact, AI platforms are simply built from enormous amounts of content that already exists, much of it collected from the internet. In essence, the collected information is static, while the way AI returns it is dynamic.

Because AI is limited to content that already exists, its output is biased: it draws on human-created data.

An AI researcher explains that the issue lies in how AI generators establish extensive statistical connections between words and phrases. When these systems generate new language, they depend on these associations, which can produce the kind of bias I saw in my search results.
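To make the idea of statistical associations concrete, here is a toy sketch. It is emphatically not how a real generator works: real systems learn far richer patterns from vastly more data. The four-sentence `corpus` is invented and deliberately skewed, and the function name `associations` is hypothetical. It only shows that if the source text pairs one word with another more often, a system that follows the counts will repeat that pairing.

```python
from collections import Counter

# A tiny, made-up corpus that is deliberately skewed. It stands in for the
# human-written text a real system learns from.
corpus = [
    "he is a successful engineer",
    "he is a successful executive",
    "he is a successful founder",
    "she is a successful executive",
]

def associations(sentences, target):
    """Count the words that appear in the same sentence as `target`."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        if target in words:
            counts.update(word for word in words if word != target)
    return counts

counts = associations(corpus, "successful")

# Compare how often each pronoun appears alongside "successful".
pronouns = {p: counts[p] for p in ("he", "she")}

# A system that simply follows the strongest association picks the majority.
most_likely = max(pronouns, key=pronouns.get)

print(pronouns)
print(most_likely)
```

Nothing in the code prefers one pronoun over the other. The skew comes entirely from the sentences it was given.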

Navigating AI Biases in The Classroom: The Solution

How we prompt AI generators affects our results.

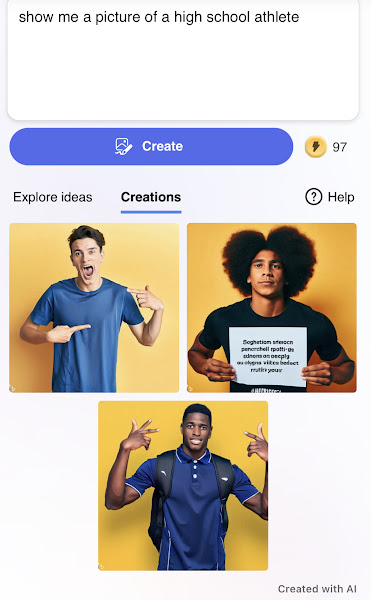

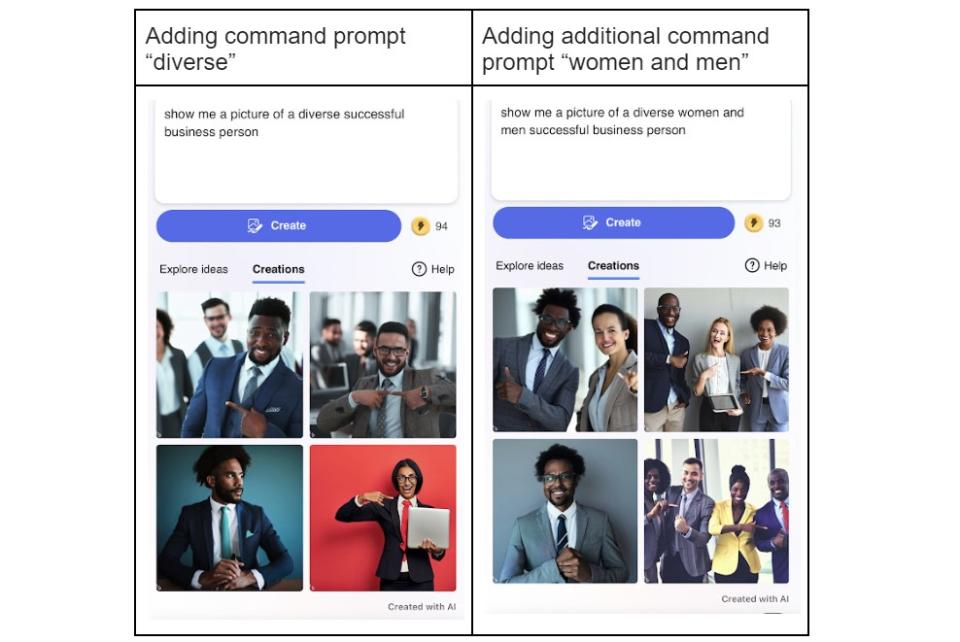

Let's take our three examples and add a word or two to refine each prompt for better balance. In the first example, I added one word, "diverse." My first attempt netted me racial diversity, but all were men!

So I added "women and men" after "diverse" in the prompt. This produced more balanced results.

I tried this for the two remaining test prompts. The word "diverse" alone improved both, although the results for the final prompt (high school athletes) still appeared a bit unbalanced.
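The refinement steps described above can be sketched as a small helper that builds each prompt variant in turn, so students can paste them into a generator and compare results side by side. This is only an illustration under stated assumptions: the function names `with_qualifiers` and `variants` are made up, and appending the qualifiers after a comma is one possible placement, not necessarily the exact wording I used.

```python
BASE_PROMPTS = [
    "a picture of a successful business person",
    "a focused student",
    "a high school athlete",
]

# Refinements from the article, applied cumulatively.
STEPS = ["diverse", "women and men"]

def with_qualifiers(prompt, qualifiers):
    """Append qualifiers to a prompt, separated by commas (placement is an assumption)."""
    if not qualifiers:
        return prompt
    return prompt + ", " + ", ".join(qualifiers)

def variants(prompt, steps):
    """Return the base prompt followed by one variant per cumulative refinement."""
    result = [prompt]
    applied = []
    for step in steps:
        applied.append(step)
        result.append(with_qualifiers(prompt, applied))
    return result

for base in BASE_PROMPTS:
    for variant in variants(base, STEPS):
        print(variant)
    print()
```

Keeping the unrefined prompt in each list matters: without that baseline, there is nothing to compare the refined results against.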

AI presents incredible opportunities, as I have written about elsewhere. Still, since the technology is here to stay, it is worth being aware of the caution signs when using generative AI.

Clearly, adding targeted words such as "diverse" to our prompts helps produce results that better represent our student populations. Understanding this, helping teachers and students become acutely aware of it, and filtering out biased content are important lessons in using AI-generated content for teaching and learning in unbiased ways.

Here are 10 examples of prompts that steer toward unbiased results.

10 Examples of Unbiased AI Prompts

"Write a short story about a diverse group of individuals working together to solve a global challenge."

"Compose a poem celebrating the beauty and strength of different cultures and backgrounds."

"Generate a piece of artwork that represents inclusivity and equality."

"Create a recipe that incorporates ingredients from various culinary traditions, highlighting the richness of diversity."

"Write a song that promotes understanding and empathy between people from different walks of life."

"Design a virtual reality experience that fosters a sense of unity and respect for all participants."

"Imagine a future society in which discrimination and prejudice have been eliminated, and describe its characteristics."

"Generate a persuasive speech advocating for equal rights and opportunities for all individuals."

"Develop a virtual assistant that provides unbiased information and recommendations without favoritism."

"Compose a letter to encourage open dialogue and constructive discussions on sensitive topics, promoting tolerance and mutual understanding."

To share your feedback and ideas on this article, consider joining our Tech & Learning online community here.