Personal Voice in iOS 17 speaks with my voice, and now I can’t unhear it

If your iPhone sounded exactly like you, what would you make it say? That was my first question when Personal Voice started working on my iPhone 14 Pro with the iOS 17 beta. Could I put it to practical use, or would it be more useful for practical jokes? The technology behind Personal Voice is no joke. It wasn’t good enough to fool my friends, but it’s clear where this technology is headed, even if the consequences of getting there are not.

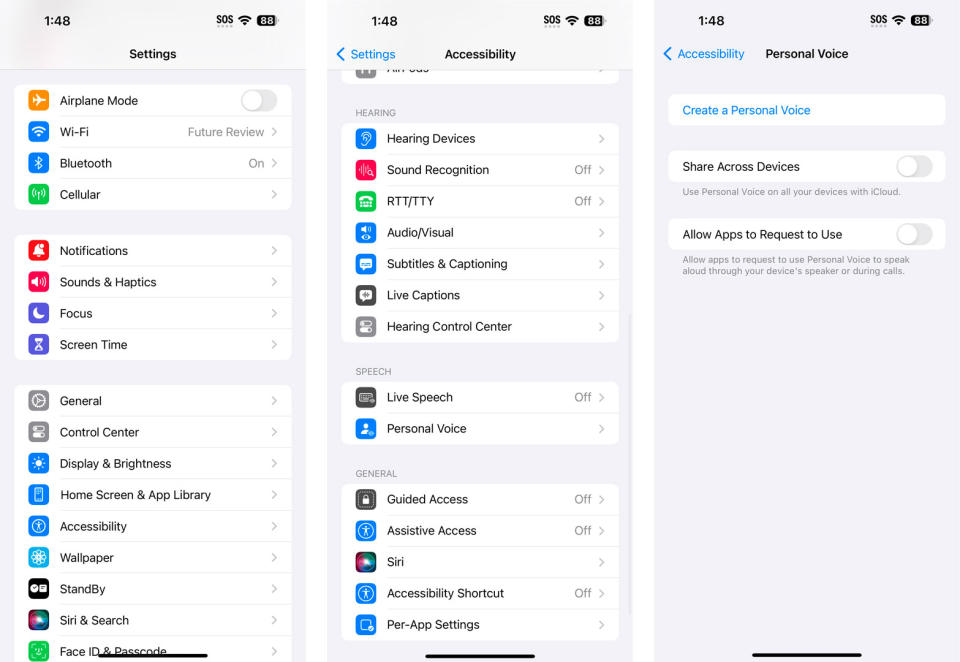

Personal Voice, one of the most powerful and fascinating features of iOS 17, is now active and ready to try. It isn’t for everyone, though, and I mean that sincerely. If you’re wondering why you would need your iPhone to speak with your voice, the answer is that Personal Voice is an accessibility feature. It is meant to offer more speech options to people who have trouble speaking or who are losing their voice through illness or injury.

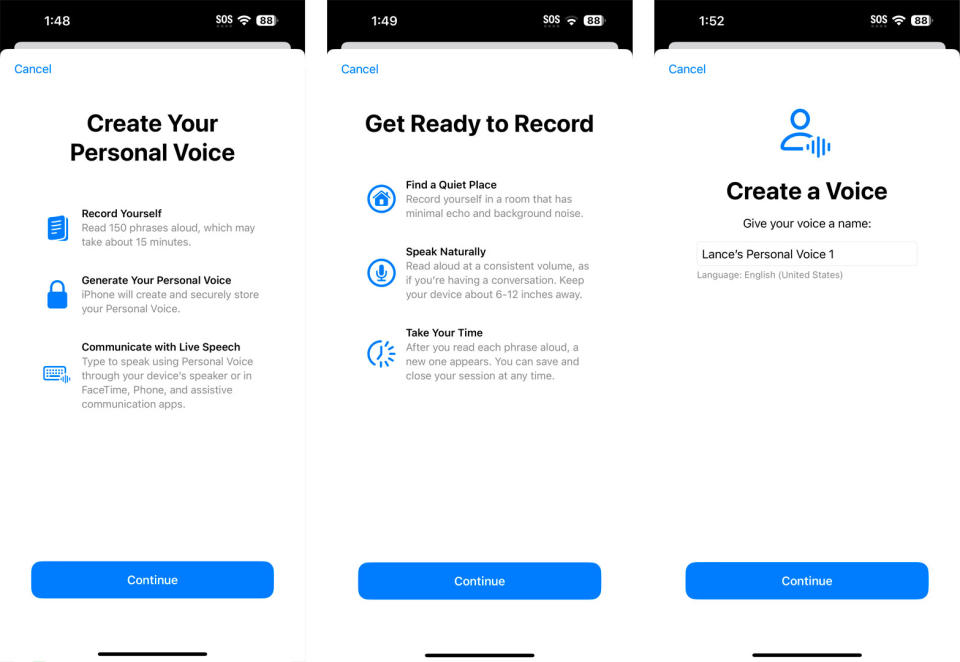

If you have been diagnosed with multiple sclerosis, cerebral palsy, or any of the myriad physical conditions for which an augmentative communication device could help, Personal Voice could be a useful solution. Right now, alternative speech devices are specialized and can cost hundreds of dollars, and no consumer device gives you speech that sounds like your own voice. If you are still able to record your own speech during the setup process, Personal Voice could let you communicate in a voice that sounds more like you.

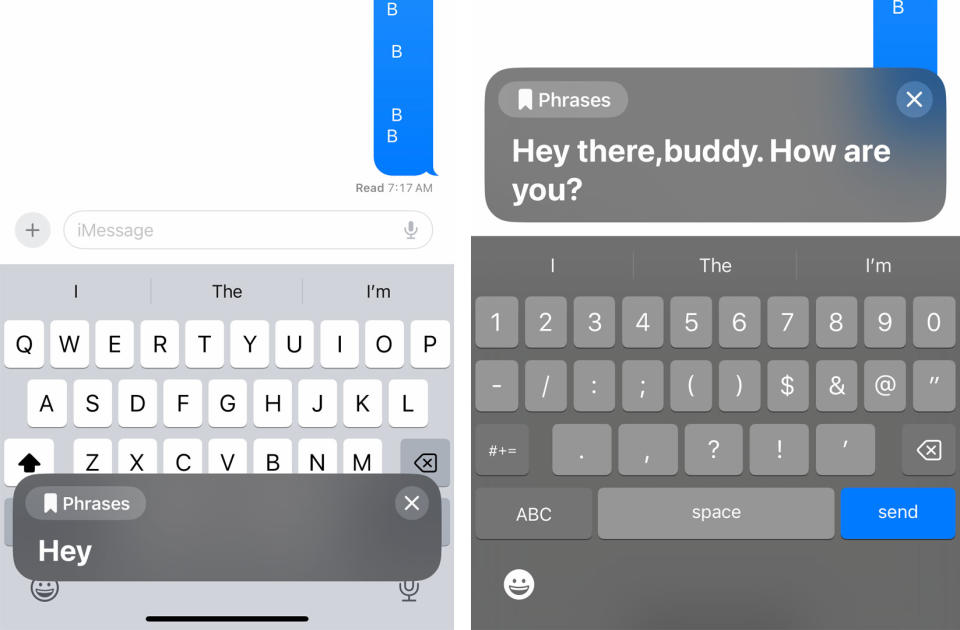

You can read about the Personal Voice setup process. Once you’ve gone through the long procedure, you can use your own voice as the main voice of your iPhone. You can also type phrases for your iPhone to speak aloud, and they sound almost exactly like you, in tone if not in inflection.

Of course, in these dawning days of deepfakes and generative AI, it’s fair to be concerned about the ability to duplicate and fake someone’s voice using only an iPhone. For now, this is still a beta feature, hidden deep within the Accessibility menu, so it’s too soon to guess at all the ways it might be used.

It’s too soon to worry about Personal Voice on iOS 17


It would be terrible if the worrywarts and naysayers ruined this amazing opportunity for accessibility on the iPhone. For now, it seems unlikely that anyone could effectively use Personal Voice for evil ends.

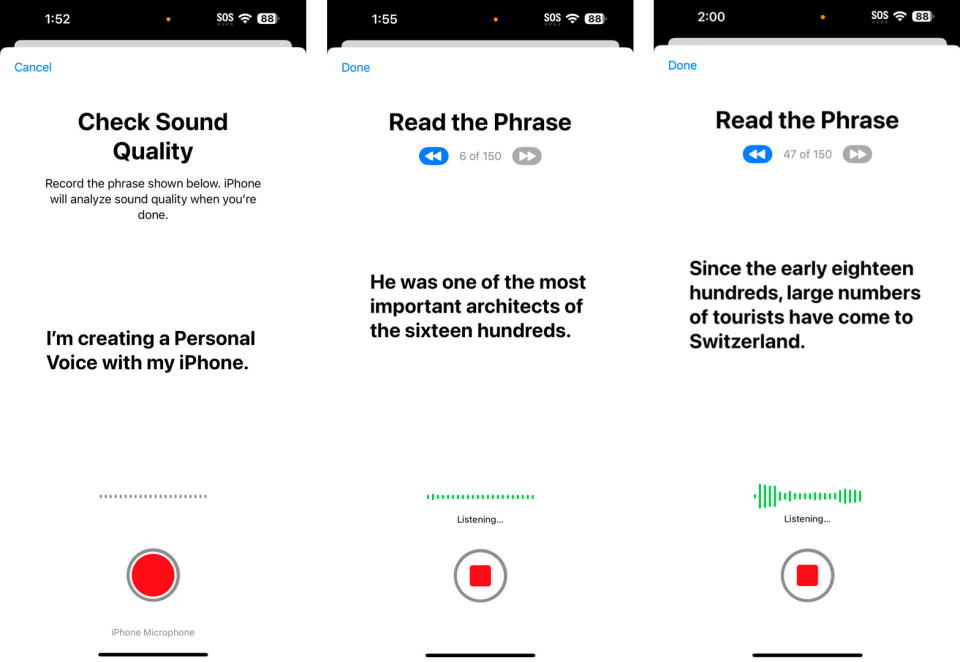

First of all, the setup takes a very long time. You need to read short phrases aloud to your iPhone, in a quiet recording environment, for fifteen minutes in total. The phrases seem randomly selected from a variety of text styles. It would be impossible to trick someone into completing the Personal Voice setup without their knowledge.

Second, your Personal Voice still sounds stilted and robotic. Many of the best voices Apple offers for Siri sound clearer and more natural than the Personal Voice I created from my own voice. The inflection was off for many words, and it did not properly intone the punctuation at the end of sentences. You couldn’t tell whether I was asking a question or making an exclamation.

I didn’t bother trying to trick my friends and family with my Personal Voice. Anyone who failed to spot the imposter would have to be inebriated. I tried a variety of phrases, from very simple phone responses to the opening paragraph of Moby-Dick. Nothing sounded exactly the way I would normally say it. Every phrase sounded a bit off.

That said, it did sound like me. It was definitely my voice. I was able to use Personal Voice to say things I had never recorded during setup. It pronounced words like “mellifluous,” “ratatouille,” and even “supercalifragilisticexpialidocious” perfectly, in my voice. Anything I could reasonably type on the keyboard, my Personal Voice would say.

That included curse words. I quoted a saying from a favorite New York City t-shirt: “F— you, you f—ing f—,” and Personal Voice had no qualms about filling in all the blanks I’ve left out above. I appreciate that. This is my voice, and I should be able to make it say whatever I want.

I tried using Personal Voice to give commands to my dog, and she wasn’t convinced. My dog, Beesly, will sit when I tell her to, but when Personal Voice said “Beesly, sit!” she ignored it. That’s more than a fun game to try: I think Personal Voice needs intonation that works for a dog command. If I’m losing my voice, even temporarily, I’d love to be able to communicate with my dog in a voice she knows well.

This will be so good it’s gonna be scary

I’m excited about the future for people who need assistive technology like this, but I also can’t help being very, very frightened of what this means for fraud and fakery. Right now, your Personal Voice is just as secure as your iPhone itself. If someone can’t break into your iPhone, they can’t steal your voice.

Apple keeps the Personal Voice data on your iPhone. You can even download all of the recordings used for setup, if you want to keep a library of your (or someone else’s) voice as a keepsake. Your Personal Voice will be available on your other devices signed in to the same iCloud account, like your iPad, but it isn’t stored in the cloud.

That’s how things stand for now, with Apple in the lead. Apple has the best reputation among consumer electronics companies for protecting the privacy of its users. Apple doesn’t make it easy to share your data, even with snooping governments.

What happens when other companies that are more ambitious and less scrupulous develop competing technology? What happens when using your own voice becomes a simple interface option, and not a more restricted Accessibility feature? In the future, it may be very easy for your smartphone to sound like anyone at all, and it’s easy to imagine how that goes wrong.

My father has been smart enough to ignore a scam plea for money from a so-called ‘friend’ asking for emergency help abroad. That’s a common scam, especially on Facebook, and he’s seen it a few times. What happens when he gets a phone call and it sounds like me, even responding in real time to his worried questions?

While this technology is so useful that it must be available to those who need it, I worry about where this will lead, and the unknown is more frightening than anything I can imagine.

We need to be ready. We need to have code words, secret phrases, and memories that only the two of us share, ready to prove our identities. People need to know that this technology is here, and we should use it only with appropriate caution.