Stability AI drops Stable Audio 2.0: here’s everything that’s new

Stability AI has unveiled the second iteration of its artificial intelligence music generation tool, offering longer tracks, audio-to-audio support, and a greater commitment to protecting creators’ copyright.

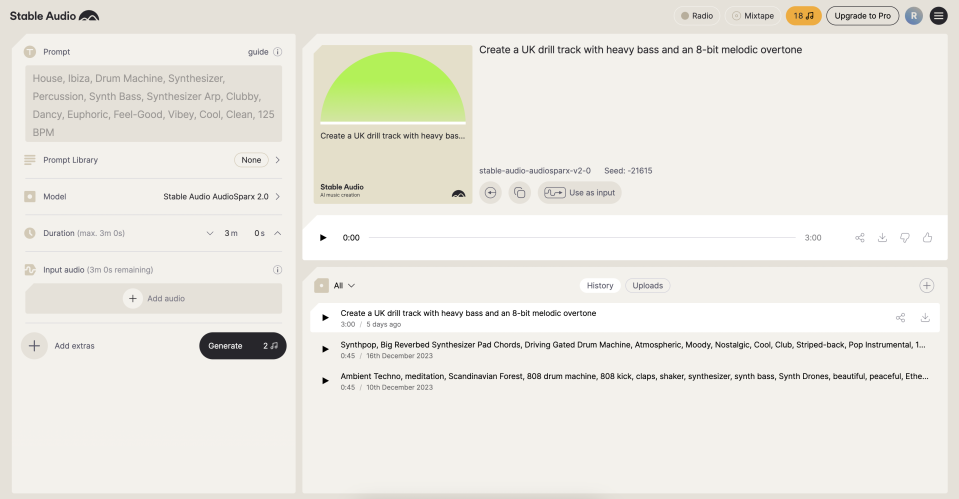

Stable Audio 2.0 lets users create tracks of up to three minutes in 44.1 kHz stereo by entering a natural language prompt such as “A beautiful piano arpeggio grows to a full beautiful orchestral piece”, “Lo-fi funk” or “drum solo”. The generated tracks are structured compositions with an intro, development, and outro, and can include stereo sound effects.

Stable Audio 2.0 is also no longer solely a text-to-audio tool: users can now upload an audio file to the platform and have it transformed into “fully produced samples”. For example, mimicking a drum sound with your voice would prompt the app to create an audio clip of a drum playing.

Taking copyright seriously

When using the new audio-to-audio feature, users must refrain from uploading copyrighted material under Stability AI’s terms of service. The company uses content recognition technology to enforce this policy and prevent copyright infringement.

Like Stable Audio 1.0, the second model is trained on AudioSparx’s library of 800,000 audio files, which contain music, sound effects, and single-instrument stems, along with text-based metadata. AudioSparx musicians unhappy with the idea of their works being used for AI model training were given the opportunity to opt out.

These reinforced copyright-protection and creator opt-out policies follow the departure of the company’s former VP of audio, Ed Newton-Rex. He announced his resignation in November 2023 in an X post that heavily criticized the company’s approach to upholding creators’ rights.

“I’ve resigned from my role leading the Audio team at StabilityAI, because I don’t agree with the company’s opinion that training generative AI models on copyrighted works is ‘fair use’,” he wrote.

He concluded his post by urging creators to voice their concerns to ensure tech companies “realise that exploiting creators can’t be the long-term solution in generative AI.”

Under the hood

In addition to longer tracks and audio-to-audio support, Stable Audio 2.0 sports a beefed-up architecture that facilitates the “generation of full tracks with coherent structures.” Adapting every component of the system has resulted in “improved performance over long time scale,” the company claimed.

The tool features a new type of compressed autoencoder that compresses raw audio waveforms into much shorter representations. Meanwhile, a diffusion transformer, similar to the one that powers Stable Diffusion 3, operates on those representations and can handle longer sequences of data.
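To get a feel for why that compression matters, the rough arithmetic can be sketched in a few lines of Python. The track length and sample rate come from the figures above; the downsampling factor of 2048 is purely an illustrative assumption, not a number given in this article, and the function names are hypothetical.

```python
import math

SAMPLE_RATE_HZ = 44_100      # from the article: 44.1 kHz
CHANNELS = 2                 # from the article: stereo
TRACK_SECONDS = 3 * 60       # from the article: up to three minutes
DOWNSAMPLING_FACTOR = 2048   # ASSUMPTION: illustrative only, not from the article


def raw_frames(seconds: int, sample_rate: int = SAMPLE_RATE_HZ) -> int:
    """Number of sample frames (one per channel) in a track."""
    return seconds * sample_rate


def latent_steps(frames: int, factor: int = DOWNSAMPLING_FACTOR) -> int:
    """Sequence length after an autoencoder that shrinks time by `factor`."""
    return math.ceil(frames / factor)


frames = raw_frames(TRACK_SECONDS)
raw_values = frames * CHANNELS
steps = latent_steps(frames)

print(f"raw frames per channel: {frames:,}")
print(f"raw values (stereo):    {raw_values:,}")
print(f"latent steps:           {steps:,}")
```

Under that assumed factor, a sequence of millions of raw samples shrinks to a few thousand steps, which is the kind of length a transformer can realistically process in one pass.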

“The combination of these two elements results in a model capable of recognizing and reproducing the large-scale structures that are essential for high-quality musical compositions,” wrote Stability AI in a blog post.

The tool is free to use and available immediately.