How we test TV brightness at TechRadar

Brightness is only one aspect of a TV’s overall performance, but it’s the one that gets the most attention. There are two main reasons brightness matters in the best TVs. The first is that you’ll want the picture to compete with other light sources in a room, such as lamps and daylight coming through windows: a brighter picture is less likely to be washed out by reflections from those sources.

The second reason has to do with high dynamic range (HDR). HDR movies and TV shows can contain extremely bright highlights, reaching 1,000 nits or even higher. (The nit is the common name for candela per square meter, or cd/m², the standard unit used for measuring TV brightness.) To convey that level of highlight detail effectively, a TV must be bright enough to reproduce it.

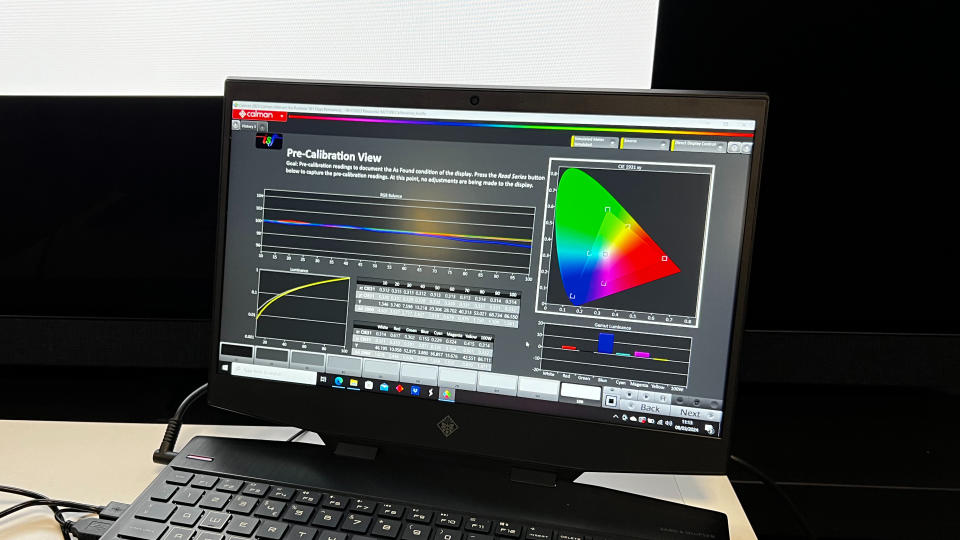

TechRadar provides two separate brightness measurements in our TV reviews: peak and full-screen. We record both in Portrait Displays’ Calman color calibration software for the TV’s Standard picture preset and for its most accurate preset (typically Movie, Cinema, or Filmmaker mode), because these often produce different results and viewers may prefer one or the other.

Peak brightness is measured with a colorimeter (such as Portrait Displays’ C6) on a 100 IRE (full white) pattern that covers 10% of the screen area, output by a test generator (such as the Murideo 8K SIX-G). This measurement corresponds to the highlight portions of images and is used to determine a TV’s maximum brightness capability in most standard uses. We also test TVs with a 2% window, which can go even brighter and is usually what manufacturers use for their brightness claims, but it is less representative of real-world viewing.
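
To give a sense of what a “10% window” means in practice, the minimal Python sketch below computes the size and position of a centered window pattern covering a given fraction of the screen area. It assumes the window keeps the screen’s aspect ratio; the function names are hypothetical, and real test generators may define window shapes differently (some use square windows, for example).

```python
import math


def window_size(screen_w: int, screen_h: int, area_fraction: float) -> tuple[int, int]:
    """Width and height (pixels) of a window covering area_fraction of the screen.

    Assumption: the window keeps the screen's aspect ratio, so each side is
    scaled by sqrt(area_fraction), which scales the area by area_fraction.
    """
    if not 0 < area_fraction <= 1:
        raise ValueError("area_fraction must be in (0, 1]")
    scale = math.sqrt(area_fraction)
    return round(screen_w * scale), round(screen_h * scale)


def window_rect(screen_w: int, screen_h: int, area_fraction: float) -> tuple[int, int, int, int]:
    """(x, y, width, height) of a window centered on the screen."""
    w, h = window_size(screen_w, screen_h, area_fraction)
    x = (screen_w - w) // 2  # left edge so the window is horizontally centered
    y = (screen_h - h) // 2  # top edge so the window is vertically centered
    return x, y, w, h


if __name__ == "__main__":
    # A 3840 x 2160 (4K) panel: 10% peak window, 2% window, and full screen
    for fraction in (0.10, 0.02, 1.0):
        print(fraction, window_rect(3840, 2160, fraction))
```

On a 4K panel this gives a 1,214 x 683-pixel window for the 10% pattern and 543 x 305 pixels for the 2% pattern, which shows how small the area is that manufacturers’ 2% brightness claims are based on.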

One reason peak brightness matters is accurate reproduction of HDR movies and TV shows. These are commonly mastered for home release at a maximum brightness of 1,000 nits, so a TV that can reach 1,000 nits can show the full range of brightness in that video. If a TV can’t reach 1,000 nits, brighter tones must be brought down to a level the TV is capable of. This is called ‘tone mapping’, and how well a TV handles it has a big effect on the quality of its HDR images, so it’s preferable for a TV to be bright enough that little or no tone mapping is required. The Dolby Vision HDR format is designed to help dimmer TVs handle tone mapping better, because it carries scene-by-scene (dynamic) metadata describing the content.
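
To illustrate why tone mapping matters, here is a minimal Python sketch that compares simply clipping highlights with a basic knee-based tone-mapping curve. The function names, the 75% knee point, and the linear roll-off are assumptions chosen for illustration; real TVs, and Dolby Vision’s metadata-driven processing, use more sophisticated and often proprietary curves.

```python
def clip(nits: float, display_peak: float) -> float:
    """No tone mapping: anything brighter than the display can show is lost."""
    return min(nits, display_peak)


def tone_map(nits: float, display_peak: float,
             content_peak: float = 1000.0, knee_ratio: float = 0.75) -> float:
    """Hypothetical knee-based tone map (illustrative, not any TV maker's algorithm).

    Tones below the knee are shown unchanged; tones between the knee and
    content_peak are compressed linearly into the range knee..display_peak.
    """
    if display_peak >= content_peak:
        # The TV can show the full mastered range, so no tone mapping is needed
        return min(nits, content_peak)
    knee = knee_ratio * display_peak
    if nits <= knee:
        return nits
    # How far this tone sits between the knee and the mastering peak (capped at 1)
    t = min((nits - knee) / (content_peak - knee), 1.0)
    return knee + t * (display_peak - knee)


if __name__ == "__main__":
    # Highlights from 1,000-nit content shown on a hypothetical 600-nit TV
    for level in (300, 700, 850, 1000):
        print(level, clip(level, 600), round(tone_map(level, 600), 1))
```

On the hypothetical 600-nit TV, clipping turns 700, 850, and 1,000-nit highlights into the same 600-nit white, while the tone-mapping curve keeps them distinct (about 518, 559, and 600 nits) at the cost of dimming them slightly. How gracefully a TV makes that trade-off is what separates good tone mapping from poor tone mapping.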

Full-screen brightness is measured with a colorimeter on a 100 IRE pattern that covers 100% of the screen area. This measurement indicates how bright the TV can get when an image is bright across the whole screen at once, as is common in sports and news programs. Pictures on a TV with high full-screen brightness will also stand up better in bright rooms where external light sources create screen glare (light reflections on the screen).

Many newer mini-LED and OLED TVs can exceed 1,000 nits peak brightness, and some flagship mini-LED sets measure as high as 2,000 nits. Full-screen brightness for most TVs will typically be lower, especially for OLED models, which use brightness-limiting circuits that cap output on large, bright, uniform images (such as full-screen test patterns) to limit power draw and help prevent burn-in.